Google Making Robots More Helpful With Complex Requests From Humans

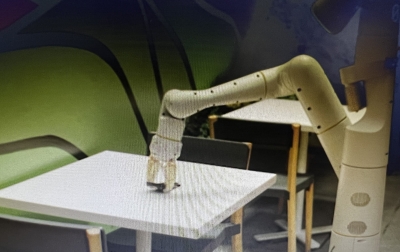

New Delhi, Aug 18: Google has developed a new large-scale learning model that improves robots' overall performance and their ability to execute complex, abstract tasks and handle complicated requests from people.

Called ‘PaLM-SayCan’, the joint research by Google and Everyday Robots uses PaLM (Pathways Language Model) in a robot learning model.

“This effort is the first implementation that uses a large-scale language model to plan for a real robot. It not only makes it possible for people to communicate with helper robots via text or speech, but also improves the robot’s overall performance,” the tech giant said in a blog post.

Today, robots by and large exist in industrial environments and are painstakingly coded for narrow tasks. This makes it impossible for them to adapt to the unpredictability of the real world.

“That’s why Google Research and Everyday Robots are working together to combine the best of language models with robot learning,” said Vincent Vanhoucke, Head of Robotics at Google Research.

The new learning model enables the robot to understand the way we communicate, facilitating more natural interaction.

“PaLM can help the robotic system process more complex, open-ended prompts and respond to them in ways that are reasonable and sensible,” Vanhoucke added.

Compared with a less powerful baseline model, integrating the system with PaLM gave the researchers a 14 per cent improvement in the planning success rate, that is, the ability to map out a viable approach to a task.

“We also saw a 13 per cent improvement on the execution success rate, or ability to successfully carry out a task. This is half the number of planning mistakes made by the baseline method,” Vanhoucke said.

The biggest improvement, 26 per cent, came in planning long-horizon tasks, meaning those that involve eight or more steps.

“With PaLM, we’re seeing new capabilities emerge in the language domain such as reasoning via chain of thought prompting. This allows us to see and improve how the model interprets the task,” said Google.

For now, these robots are just getting better at grabbing snacks for Googlers in the company’s micro-kitchens.

With IANS Inputs…